
Differentially Private finetuning for LLMs

Tags: differential, privacy, epsilon, ML, Machine Learning

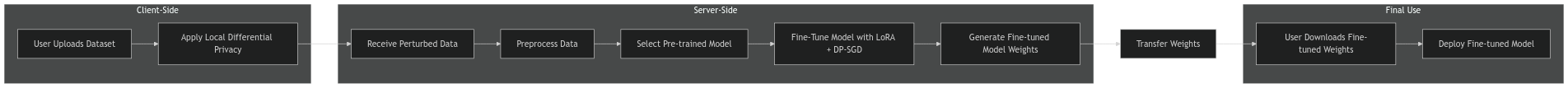

I already explained differentially private machine learning (DP ML) in another post [1], so this post covers a different question: how can we design a service that lets customers fine-tune large language models (LLMs) in a privacy-preserving way?

With the rise of data privacy laws such as the GDPR (known as DSGVO in Germany) and the CCPA, companies face increased scrutiny of their data handling practices. The demand for privacy-preserving AI models is growing, especially in highly regulated industries. Despite this demand, many businesses lack the in-house expertise to fine-tune models themselves. Although there are many third-party fine-tuning services, they typically do not offer formal privacy guarantees for the potentially sensitive datasets.

This blog post addresses a potential cloud service offering privacy-preserving LLM finetuning.

Overview

Our goal is to design a system where:

- Users upload their dataset: This dataset may contain sensitive information.

- The dataset is perturbed: This ensures that the original sensitive data cannot be reconstructed.

- The model is fine-tuned: The perturbed data is used to fine-tune a pre-trained model, preserving its utility while protecting privacy.

- Users receive fine-tuned model weights: The resulting model is privacy-preserving and ready for deployment.

The complete code, including the Flask web service implementation, is available here: https://github.com/anon767/DP_FinetuningService/tree/main

Frontend

To ensure privacy, we perturb the dataset directly in the user’s browser before it is sent to the server, so the raw sensitive data never leaves the user’s environment. We achieve this by mapping each word to a Word2Vec (W2V) embedding and applying a noise mechanism to it [2].

We train the W2V model on the text8 dataset with a vocabulary of 100k words and the following settings:

- AdamW with weight decay

- 50 dimensions (needs to be fast for inference in the browser)

- Embeddings clipped to [-1, 1] so that the sensitivity is bounded when calculating epsilon

- Window size of 5 and 8 negative samples for the noise-contrastive loss

- We apply either a Laplacian or Gaussian noise mechanism; initial experiments show Gaussian noise performs better. With an embedding range of two (global sensitivity) and a noise standard deviation of 0.1, our LDP epsilon value is approximately 96.9 (assuming delta = 10^-5).
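The epsilon quoted above can be reproduced with the classic Gaussian-mechanism calibration from Dwork and Roth, sigma = sensitivity * sqrt(2 ln(1.25 / delta)) / epsilon, solved for epsilon. The sketch below assumes this is the formula behind the number (the post does not state it explicitly), and the function name is purely illustrative. Keep in mind that the classic bound is formally proven only for epsilon < 1, so a value this large is best read as a nominal figure.

```python
import math


def gaussian_epsilon(sensitivity: float, sigma: float, delta: float) -> float:
    """Solve sigma = sensitivity * sqrt(2 * ln(1.25 / delta)) / epsilon for epsilon."""
    return sensitivity * math.sqrt(2.0 * math.log(1.25 / delta)) / sigma


# Embeddings clipped to [-1, 1] give a range (sensitivity) of 2; noise std is 0.1.
ldp_epsilon = gaussian_epsilon(sensitivity=2.0, sigma=0.1, delta=1e-5)
print(f"LDP epsilon: {ldp_epsilon:.1f}")
```

With a sensitivity of 1 (the gradient clipping norm used on the backend), the same function returns roughly 48.45.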

Finally, we load the Word2Vec model with TensorFlow.js in the browser and perturb the text in JavaScript: each word is mapped to its vector, and each vector is perturbed with Gaussian noise.
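The post's implementation runs in JavaScript with TensorFlow.js; what follows is only a Python sketch of the same idea under stated assumptions. The tiny `EMBEDDINGS` table, the `<unk>` fallback token, and the function names are hypothetical stand-ins for the trained 50-dimensional W2V model.

```python
import random

SIGMA = 0.1  # noise standard deviation from the post

# Hypothetical 4-dimensional toy table; the real model uses 50 dimensions
# and a 100k-word vocabulary.
EMBEDDINGS = {
    "the": [0.10, -0.20, 0.30, 0.00],
    "patient": [0.90, 0.40, -0.70, 0.20],
    "<unk>": [0.00, 0.00, 0.00, 0.00],
}


def clip(value, low=-1.0, high=1.0):
    """Clamp a coordinate to [low, high] so the sensitivity stays bounded."""
    return max(low, min(high, value))


def perturb_text(text, rng, sigma=SIGMA):
    """Map each word to its clipped vector and add Gaussian noise per coordinate."""
    perturbed = []
    for word in text.lower().split():
        vector = EMBEDDINGS.get(word, EMBEDDINGS["<unk>"])
        perturbed.append([clip(x) + rng.gauss(0.0, sigma) for x in vector])
    return perturbed


rng = random.Random(0)  # seeded only to make this demo repeatable
vectors = perturb_text("The patient sleeps", rng)
print(len(vectors), len(vectors[0]))
```

Only the returned noisy vectors would be sent to the server; the words themselves stay on the client.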

The cool thing is that the user does not have to trust us up to this point: the cleartext data never leaves their premises. Instead of the original text, we only send the perturbed vectors, which roughly correspond to it.

Backend: Fine-Tuning with Differential Privacy

Once the perturbed data reaches the server, the backend fine-tunes the selected model. To prevent the model from memorizing sensitive information, we apply Differentially Private Stochastic Gradient Descent (DP-SGD). Additionally, we use Low-Rank Adaptation (LoRA) to train only a small number of additional parameters while the pre-trained weights stay frozen, which preserves the original performance. By clipping gradients to a norm of 1 and adding noise with a standard deviation of 0.1, we achieve an epsilon of approximately 48.45 (assuming delta = 10^-5).

Fine-Tuning with LoRA and DP-SGD

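So how does LoRA work [3]? Imagine a feedforward layer:

$$X_t = W X_{t-1} + b$$

LoRA freezes $W$ and $b$ and adds a trainable low-rank update $W_{\text{LoRA}} = BA$, where $B$ has shape $|\text{outputFeatures}| \times r$ and $A$ has shape $r \times |\text{inputFeatures}|$. This gives us:

$$X_t = W X_{t-1} + b + W_{\text{LoRA}} X_{t-1}$$

So instead of optimizing $|\text{outputFeatures}| \cdot |\text{inputFeatures}|$ parameters, we optimize $|\text{outputFeatures}| \cdot r + |\text{inputFeatures}| \cdot r$ parameters. For this to reduce the total parameter count, the rank must satisfy $r < \frac{|\text{outputFeatures}| \cdot |\text{inputFeatures}|}{|\text{outputFeatures}| + |\text{inputFeatures}|}$, which is half the harmonic mean of $|\text{outputFeatures}|$ and $|\text{inputFeatures}|$. In practice $r$ is typically chosen far below that bound.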
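The parameter counts can be checked numerically. The sketch below compares a full weight matrix with its LoRA factors and computes the break-even rank obtained by setting the two counts equal. The layer size of 4096 x 4096 and the rank of 8 are hypothetical values chosen for illustration, not settings taken from the post.

```python
def full_param_count(out_features: int, in_features: int) -> int:
    """Number of entries in the dense weight matrix W."""
    return out_features * in_features


def lora_param_count(out_features: int, in_features: int, rank: int) -> int:
    """B has shape (out_features, rank) and A has shape (rank, in_features)."""
    return out_features * rank + rank * in_features


def break_even_rank(out_features: int, in_features: int) -> float:
    """LoRA trains fewer parameters than W only while rank stays below this value."""
    return out_features * in_features / (out_features + in_features)


out_f, in_f, r = 4096, 4096, 8  # hypothetical layer size and rank
print(full_param_count(out_f, in_f))
print(lora_param_count(out_f, in_f, r))
print(break_even_rank(out_f, in_f))
```

For this hypothetical layer, the LoRA factors hold 256 times fewer trainable parameters than the dense matrix.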

We load the selected model and find all modules that LoRA can adapt. We could hardcode these, but since we do not know in advance which model the user will select, we discover them dynamically.
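One way to discover adaptable modules dynamically is to walk the model's submodules and collect the names of every layer of a given type. The sketch below is an assumption about how this could look, not the repository's code: `find_lora_targets` is a hypothetical helper, and the `Fake*` classes are stand-ins that mimic the `named_modules()` contract of `torch.nn.Module` so the example runs without PyTorch.

```python
def find_lora_targets(model, layer_types):
    """Collect the leaf names of all submodules that are instances of layer_types.

    `model` only needs to expose named_modules(), yielding (dotted_name, module)
    pairs, as torch.nn.Module does.
    """
    targets = set()
    for dotted_name, module in model.named_modules():
        # The root module is reported with an empty name and is skipped.
        if dotted_name and isinstance(module, layer_types):
            # "layers.0.attn.q_proj" -> "q_proj"
            targets.add(dotted_name.rsplit(".", 1)[-1])
    return sorted(targets)


class FakeLinear:
    """Hypothetical stand-in for torch.nn.Linear."""


class FakeNorm:
    """Hypothetical stand-in for a layer that LoRA does not adapt."""


class FakeModel:
    """Hypothetical stand-in with the same named_modules() contract as PyTorch."""

    def named_modules(self):
        yield "", self
        yield "layers.0.attn.q_proj", FakeLinear()
        yield "layers.0.attn.v_proj", FakeLinear()
        yield "layers.0.norm", FakeNorm()
        yield "layers.1.attn.q_proj", FakeLinear()


print(find_lora_targets(FakeModel(), (FakeLinear,)))
```

With PyTorch, the call would be `find_lora_targets(model, (torch.nn.Linear,))`, and the resulting names could be passed as `target_modules` to `peft.LoraConfig`; whether the linked repository uses PEFT is an assumption here.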

Finally, we use Opacus to train the LoRA adapters of the LLM with DP-SGD [4].
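To make clear what DP-SGD does on each batch, here is a dependency-free sketch of a single gradient computation: clip every per-sample gradient, sum, add Gaussian noise, and average. This is not Opacus code, only an illustration of the mechanism it automates; the function name and the toy gradients are hypothetical.

```python
import math
import random


def dp_sgd_gradient(per_sample_grads, max_grad_norm, noise_multiplier, rng):
    """Clip each per-sample gradient, sum them, add Gaussian noise, and average."""
    dim = len(per_sample_grads[0])
    summed = [0.0] * dim
    for grad in per_sample_grads:
        norm = math.sqrt(sum(g * g for g in grad))
        # Scale down only gradients whose L2 norm exceeds the clipping bound.
        scale = min(1.0, max_grad_norm / (norm + 1e-12))
        for i, g in enumerate(grad):
            summed[i] += g * scale
    # The noise standard deviation is proportional to the clipping bound.
    noise_std = noise_multiplier * max_grad_norm
    noisy = [s + rng.gauss(0.0, noise_std) for s in summed]
    return [n / len(per_sample_grads) for n in noisy]


rng = random.Random(0)  # seeded only to make this demo repeatable
toy_grads = [[3.0, 4.0], [0.3, 0.4]]  # hypothetical per-sample gradients
print(dp_sgd_gradient(toy_grads, max_grad_norm=1.0, noise_multiplier=0.1, rng=rng))
```

In Opacus, these two knobs correspond to the `max_grad_norm` and `noise_multiplier` arguments of `PrivacyEngine.make_private`. With a clipping norm of 1, a noise multiplier of 0.1 yields the noise standard deviation of 0.1 mentioned above.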

Conclusion

In this post, we have outlined a framework for privacy-preserving fine-tuning of large language models (LLMs). The proposed system lets users upload sensitive datasets with more confidence, because Local Differential Privacy (LDP) mechanisms are applied to the data directly in their browser. Once perturbed, the data is used to fine-tune the model on the server with Differentially Private Stochastic Gradient Descent (DP-SGD), while Low-Rank Adaptation (LoRA) keeps the optimization parameter-efficient.

- Dwork, C., & Roth, A. (2014). “The Algorithmic Foundations of Differential Privacy.” Foundations and Trends® in Theoretical Computer Science. (Comprehensive introduction to DP basics.)

- Hu, E. J., Shen, Y., Wallis, P., et al. (2021). “LoRA: Low-Rank Adaptation of Large Language Models.” arXiv preprint arXiv:2106.09685. (Original paper on LoRA.)

- Abadi, M., Chu, A., Goodfellow, I., et al. (2016). “Deep Learning with Differential Privacy.” Proceedings of the 2016 ACM SIGSAC Conference on Computer and Communications Security (CCS'16). (Foundational work introducing DP-SGD.)